In recent years, Large Language Models (LLMs) have stood out as revolutionary tools capable of producing human-quality text: coherent and contextually relevant prose, fluent translations, and creative content on demand. Beneath the surface, one parameter strongly affects the nature and quality of the generated output: the LLM temperature.

At its core, LLM temperature controls the balance between playing it safe and exploring new possibilities, that is, exploitation versus exploration in the model's output. Lower temperatures favor exploiting the patterns the LLM has already learned, making the outputs more predictable and reliable. This is ideal when accurate and factual information is needed. Conversely, higher temperatures encourage exploration: the LLM ventures beyond the most familiar patterns, which increases the chance of surprising and creative results and yields more diverse, albeit riskier, outputs. This can be useful for brainstorming ideas.

LLMs are usually trained on large amounts of text data. They learn how likely words are to appear together, building a complex network of possibilities. When an LLM generates output, each word in the vocabulary is a candidate for the next position, although usually only a few of them have a meaningful likelihood of being chosen. Those likelihoods are represented by a set of raw scores called logits. The softmax function then transforms the logits into probabilities that sum to 1. A temperature value in the softmax function scales the logits, influencing the final probabilities calculated for each candidate word and thereby affecting the selection of the next word in the output.

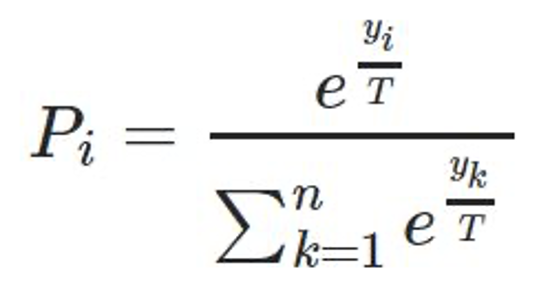

Mathematically, the softmax probability of a given candidate word i with logit y_i is defined as:

$$P(y_i) = \frac{e^{y_i / T}}{\sum_{j=1}^{n} e^{y_j / T}}$$

where:

e is Euler's number (approximately 2.71828).

T is the LLM temperature parameter.

n is the size of the vocabulary.
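As a rough illustration, a temperature-scaled softmax can be sketched in a few lines of plain Python. The function name `softmax_with_temperature` and the three example logits are assumptions made for this sketch, not values from any particular model. Each logit is divided by T before exponentiation, and the results are normalized so that they sum to 1.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw logits into probabilities, dividing each logit by T first."""
    if temperature <= 0:
        # T = 0 would divide by zero; it needs separate handling.
        raise ValueError("temperature must be positive")
    scaled = [y / temperature for y in logits]
    # Subtracting the maximum avoids overflow and does not change the result.
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for three candidate words.
logits = [2.0, 1.0, 0.1]
probs = softmax_with_temperature(logits, temperature=1.0)
print([round(p, 3) for p in probs])  # [0.659, 0.242, 0.099]
```

Subtracting the maximum before exponentiating is a common numerical-stability trick; it leaves the probabilities unchanged because the same factor cancels in the numerator and denominator.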

From the above softmax function, we can see that the LLM temperature acts as a control mechanism. It affects the probabilities assigned to each candidate word by scaling the logits.

Lower Temperature (𝑇<1): When 𝑇 is small, the softmax function magnifies differences between logits, leading to sharper probability distributions. This means that the model becomes more confident in selecting words with higher logits, making the LLM prioritize the most probable next word and effectively reducing randomness in the generated text. As a result, lower temperatures promote the exploitation of high-confidence predictions, often yielding more deterministic and conservative outputs.

Higher Temperature (𝑇>1): On the other hand, increasing 𝑇 softens the differences between logits, resulting in flatter probability distributions. Less probable words become more likely contenders. This encourages the model to explore a wider range of word choices, even those with lower logits. Consequently, higher temperatures foster diversity in the generated text, allowing the model to produce more varied and creative outputs.
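To make the sharpening and flattening effect concrete, the sketch below applies three temperatures to the same set of logits and reports the Shannon entropy of each resulting distribution as a simple measure of flatness. The logits and the helper names `softmax_with_temperature` and `entropy` are illustrative assumptions rather than part of any specific library.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Temperature-scaled softmax (assumes temperature > 0)."""
    scaled = [y / temperature for y in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs):
    """Shannon entropy in bits; higher means a flatter distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

logits = [2.0, 1.0, 0.1]  # hypothetical logits for three candidate words
results = {}
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    results[t] = probs
    formatted = " ".join(f"{p:.2f}" for p in probs)
    print(f"T={t}: {formatted}  entropy={entropy(probs):.2f} bits")

# The probabilities come out as roughly:
#   T=0.5: 0.86 0.12 0.02   (sharper: the top word dominates)
#   T=1.0: 0.66 0.24 0.10
#   T=2.0: 0.50 0.30 0.19   (flatter: the other words catch up)
```

With these example logits, the most likely word falls from about 86% at T=0.5 to about 50% at T=2, while the least likely word rises from about 2% to about 19%.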

In practice, LLMs may employ different sampling strategies to incorporate LLM temperature during text generation. For example, at 𝑇=0, where dividing the logits by 𝑇 is undefined, greedy decoding is usually employed instead. The model selects the word with the highest probability, effectively choosing the most confident prediction at each step.
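One possible way to combine the two behaviors is sketched below: fall back to greedy selection when the temperature is 0, and otherwise draw a word at random in proportion to its temperature-scaled probability. The function `sample_next_word`, the candidate words, and the logits are hypothetical and chosen only for illustration; real inference libraries differ in how they handle this.

```python
import math
import random

def sample_next_word(candidates, logits, temperature, rng=random):
    """Pick the next word: greedy at T = 0, weighted sampling otherwise."""
    if temperature == 0:
        # Greedy decoding: take the candidate with the highest logit.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return candidates[best]
    scaled = [y / temperature for y in logits]
    m = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - m) for s in scaled]
    # random.choices accepts unnormalized weights, so no division is needed.
    return rng.choices(candidates, weights=weights, k=1)[0]

candidates = ["cat", "dog", "fish"]  # hypothetical candidate words
logits = [2.0, 1.0, 0.1]             # hypothetical logits
rng = random.Random(0)               # seeded for repeatable runs

print(sample_next_word(candidates, logits, temperature=0))  # always "cat"

draws = [sample_next_word(candidates, logits, 2.0, rng) for _ in range(200)]
print({word: draws.count(word) for word in candidates})
```

At T=0 the result is the same on every call, whereas at T=2 the 200 draws are spread across all three candidates, with "cat" still the most frequent.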

There's no magic number for LLM temperature. The ideal setting depends on the specific goal, and choosing it involves balancing factors such as coherence, diversity, and the requirements of the task. While there's no one-number-fits-all solution, here are some strategies:

Coherence: If your task requires generating text that closely follows the input context or maintains a formal tone, such as summarizing research papers or writing technical reports, lower temperatures (𝑇<1, for example around 0.5) may be preferable to ensure high coherence and accuracy.

Creativity and Diversity: For tasks where creativity and diversity are valued, such as creative writing or brainstorming, higher temperatures (𝑇>1) can encourage the generation of more varied and innovative outputs.

Experiment with different temperature values and evaluate the quality of the generated outputs. The evaluation can be done via human or user feedback: observe how the feedback on the LLM's outputs changes as the temperature varies. It is also worth noting that the optimal LLM temperature may not stay the same as the context or tasks evolve. Periodic reassessment and iteration are often beneficial.
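One simple way to organize such an experiment is to record a rating for each generated output together with the temperature that produced it, then compare the average rating per temperature. The sketch below assumes a hypothetical feedback format of `(temperature, rating)` pairs on a 1 to 5 scale. The ratings shown are placeholders invented for illustration, not real results.

```python
from collections import defaultdict
from statistics import mean

def mean_rating_by_temperature(feedback):
    """Group (temperature, rating) pairs and average the ratings per temperature."""
    grouped = defaultdict(list)
    for temperature, rating in feedback:
        grouped[temperature].append(rating)
    return {t: mean(ratings) for t, ratings in sorted(grouped.items())}

# Placeholder feedback, invented for illustration only.
feedback = [
    (0.3, 4), (0.3, 5),
    (0.7, 4), (0.7, 3),
    (1.2, 2), (1.2, 3),
]

summary = mean_rating_by_temperature(feedback)
best_temperature = max(summary, key=summary.get)

for t, score in summary.items():
    print(f"T={t}: mean rating {score}")
print("best-rated temperature in this sample:", best_temperature)
```

Rerunning the same aggregation on fresh feedback from time to time is one way to carry out the periodic reassessment, since the best-rated temperature can shift as the tasks change.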

In some cases, tuning the LLM temperature for specific tasks or datasets may be necessary to achieve optimal performance. Temperature is set at generation time rather than learned during training, so if you train or fine-tune the LLM on domain-specific data, adjust the temperature afterwards based on the specific requirements of the task.

Finding the ideal temperature for an LLM is a delicate balancing act. Push it too high, and you risk nonsensical outputs; too low, and the output becomes repetitive. It takes practice and experimentation to find the sweet spot. Furthermore, the LLM temperature value isn't the only factor affecting output. The prompt or question you provide to the LLM also plays a crucial role. A strong prompt with clear instructions might work well with a higher temperature, because the instructions already constrain the output, while a more open-ended one could benefit from a lower temperature to keep the output focused.

In our exploration of the LLM temperature, we have seen its crucial impact on the performance of LLMs. The LLM temperature serves as a critical parameter influencing the balance between predictability and creativity in generated text. Lower temperatures prioritize exploiting learned patterns, yielding more deterministic outputs, while higher temperatures encourage exploration, fostering diversity and innovation. Understanding and tuning the LLM temperature enables practitioners to tailor text generation to specific requirements, striking a suitable balance between coherence and novelty.