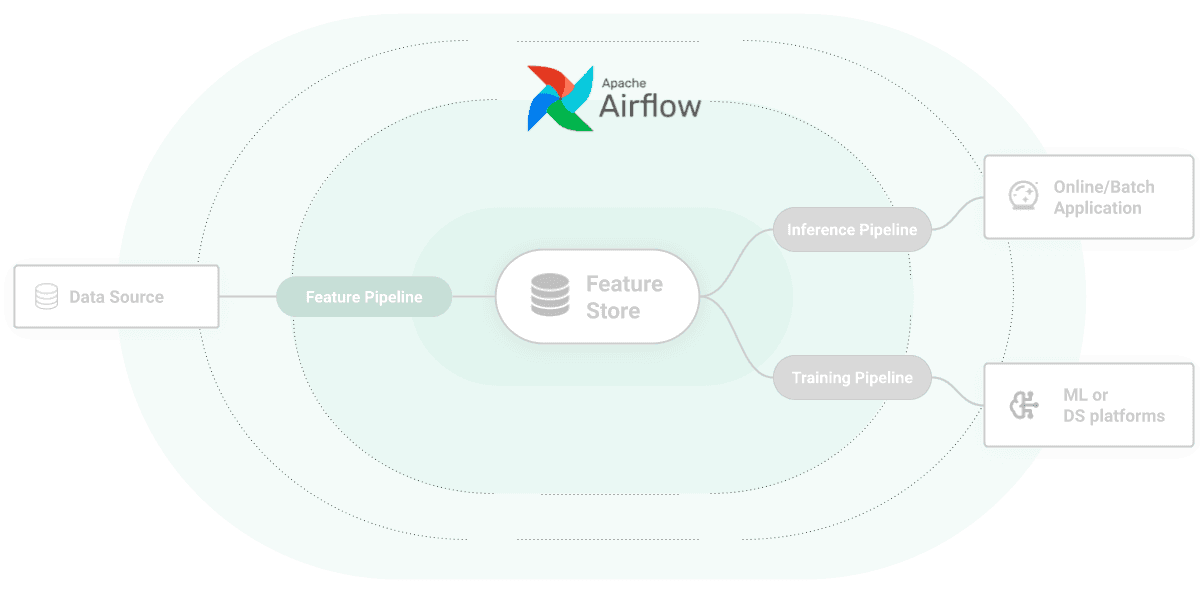

Airflow

compute-pipelines

Airflow can be used as the orchestration engine for all your machine learning pipelines (feature pipelines, training pipelines, and batch inference pipelines).
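As a rough illustration of what that orchestration could look like, the sketch below chains three placeholder steps (feature pipeline, training pipeline, batch inference pipeline) into a single Airflow DAG. The function names, task IDs, `dag_id`, and return values are hypothetical and not taken from any particular project. It assumes Airflow 2.4 or later, where `DAG` accepts the `schedule` argument. The import is guarded only so that the placeholder functions can be exercised without Airflow installed.

```python
from datetime import datetime


# Hypothetical placeholder steps; real pipelines would read and write actual data.
def run_feature_pipeline() -> str:
    return "features written"


def run_training_pipeline() -> str:
    return "model trained"


def run_batch_inference() -> str:
    return "predictions written"


# Ordered (task_id, callable) pairs; the DAG below chains them in this order.
PIPELINE_STEPS = [
    ("feature_pipeline", run_feature_pipeline),
    ("training_pipeline", run_training_pipeline),
    ("batch_inference_pipeline", run_batch_inference),
]

try:
    from airflow import DAG
    from airflow.operators.python import PythonOperator
except ImportError:
    # Airflow is not installed; the DAG definition below is skipped.
    DAG = None

if DAG is not None:
    with DAG(
        dag_id="ml_pipelines_sketch",      # hypothetical name
        start_date=datetime(2024, 1, 1),
        schedule=None,                     # trigger manually; assumes Airflow >= 2.4
        catchup=False,
    ) as dag:
        previous = None
        for task_id, fn in PIPELINE_STEPS:
            task = PythonOperator(task_id=task_id, python_callable=fn)
            if previous is not None:
                previous >> task           # run each step after the one before it
            previous = task
```

In practice the three pipelines often run on different schedules (for example, features hourly and training weekly), so separate DAGs may fit better than one linear chain; the single DAG here is only meant to show the dependency order.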

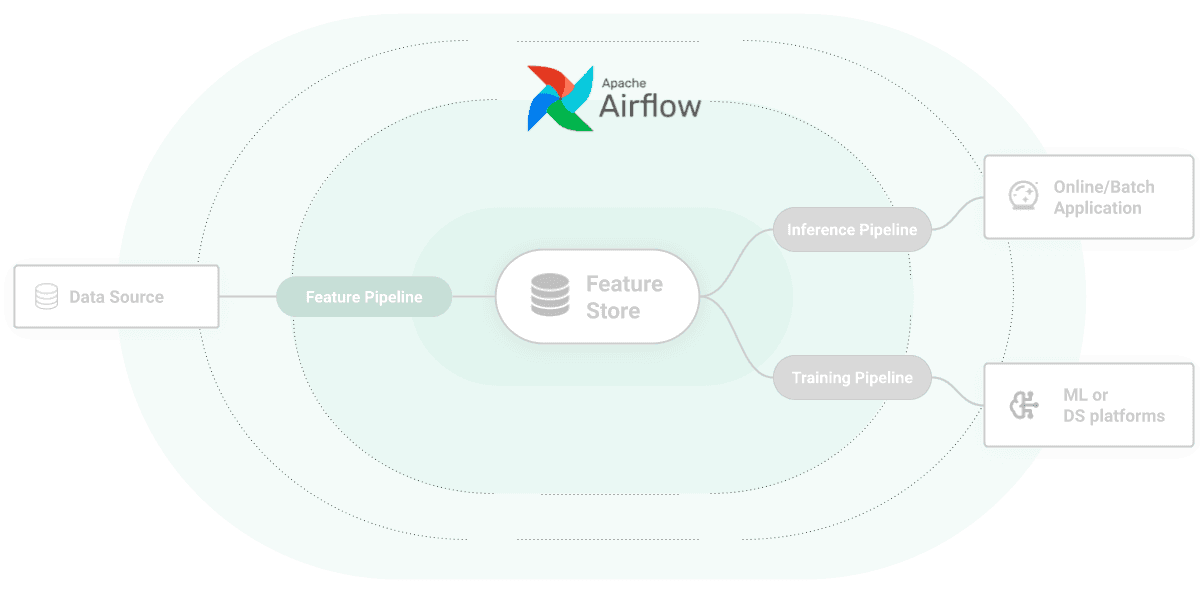

compute-pipelines

Airflow can be used as the orchestration engine for all your machine learning pipelines (feature pipelines, training pipelines, and batch inference pipelines).