High-Risk AI in the EU AI Act

What is the corporate and societal significance of the EU AI Act, and how does it affect organizations with high-risk AI systems?

It’s been almost 18 months since we wrote about the EU AI Act. A lot has happened since then, and now is the right moment to pick up the thread: on December 9th, 2023, the Members of the European Parliament reached a provisional agreement with the Council on the bill. This is a significant milestone that will affect all of us in the artificial intelligence (AI) industry in the coming months and years.

Let’s explore why it matters and recap where things stand.

The Importance for Society at Large

First and foremost, we should all welcome some of the societal implications of this Act. The AI Act explicitly bans a number of applications, and these bans reflect a political choice: they restrict biometric categorisation systems, facial recognition databases, emotion recognition, social scoring, manipulative propaganda and other authoritarian techniques that we would want to limit in a free and open European society. There are some exemptions for law enforcement, but by and large the AI Act appears to side with civil liberties as far as possible.

The Importance to the Corporate World

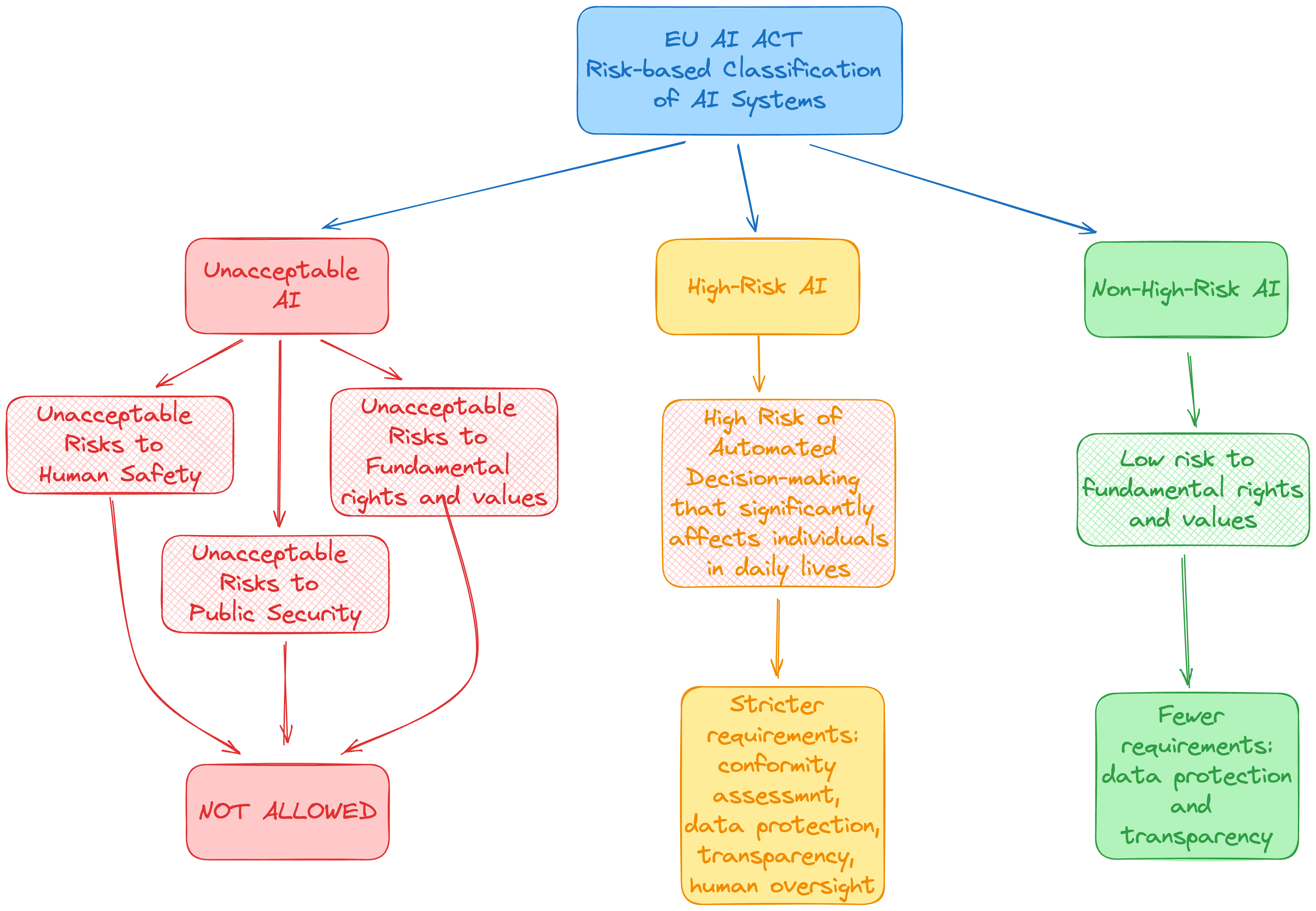

Second, the Act establishes, now and for the future, an important risk-based categorization of machine learning (ML) and AI systems. We won’t go into the limited-risk and low-risk systems in this article, as the biggest impact on corporate IT infrastructure will clearly come from the category of so-called high-risk AI applications. Unsurprisingly, the current publications name quite a few of those: looking through the Annexes document, and specifically Annex III (pages 4-5 of that document), we can see that the primary impact will be on people-related processes (recruitment, selection, evaluating candidates, etc.) and on finance-related processes (creditworthiness analysis, customer credit scoring, etc.). On top of that, there are many government-specific limitations with regard to law enforcement, migration and border control, justice and democratic processes.

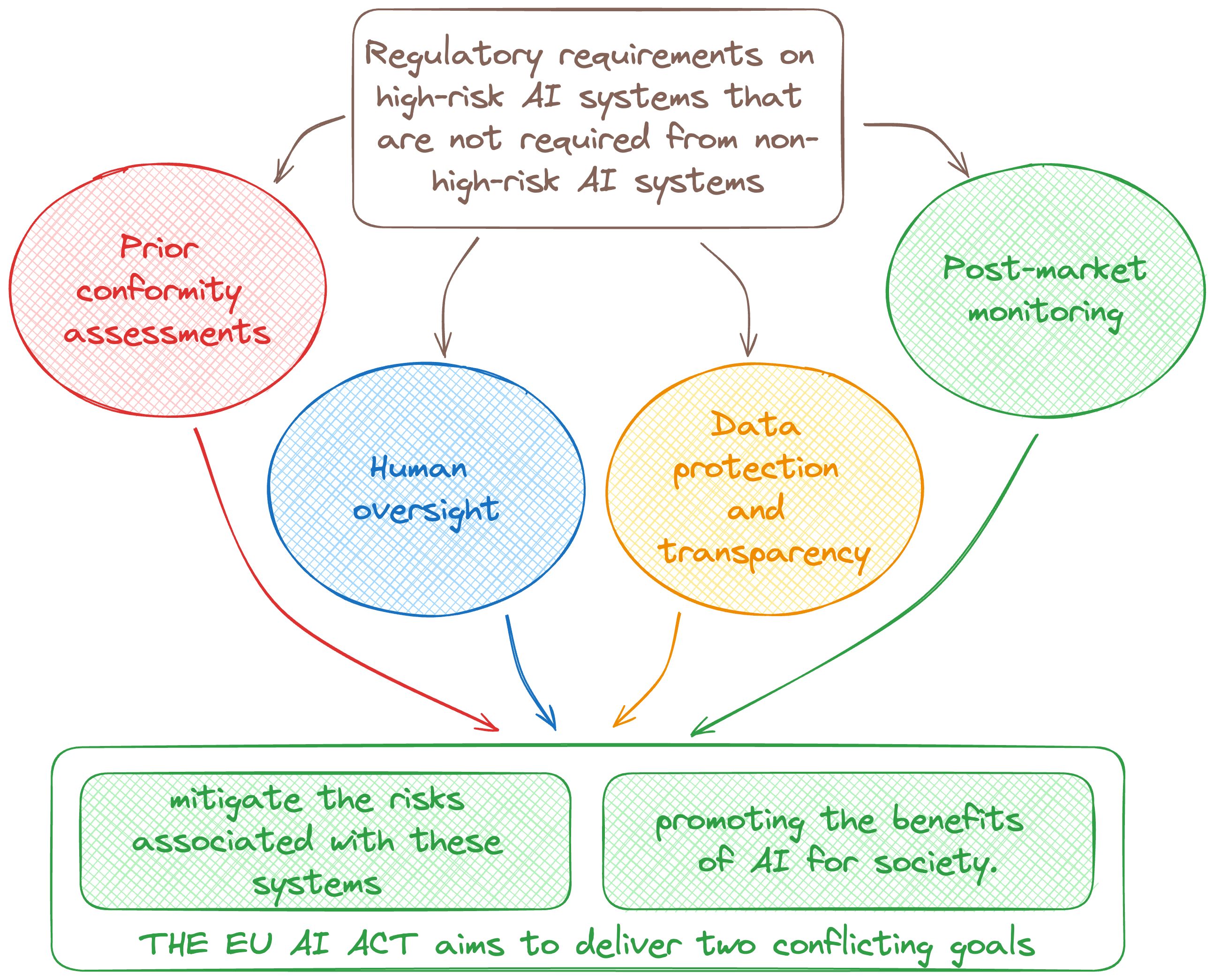

All of these high-risk AI applications will be subject to specific requirements:

- A risk management system will need to be established, implemented, documented and maintained

- Data governance will need to be established for the training, validation and testing data sets

- Technical documentation will need to be drawn up before the system is placed on the market

- The system will need to keep accurate records (logs) of its operation throughout its lifecycle

- The system’s operation will need to be transparent and explained to its users

- The system will need to be overseen by humans while in operation

- The system will need to achieve an appropriate level of accuracy, robustness and cybersecurity

In practice, these requirements mean that the systems need to be well run from an operational perspective, and that haphazard use of machine learning and artificial intelligence will need to be very limited, or non-existent, in the corporate world. Facing the risk of significant fines, corporate operators will need to systematically address all of the above aspects of their high-risk systems, or not run them at all. So let’s turn to what that could mean for our customers at Hopsworks, and for Hopsworks as a company.

Figure 1: Regulatory Requirements for High-Risk AI Systems

What Is High-Risk AI?

It is important to know whether your AI system is considered “high-risk AI”, because if it is, your AI (or machine learning) system will have to be assessed before it can be placed on the market. The European Parliament’s definition of high-risk AI covers two categories:

- AI systems that are used in products falling under the EU’s product safety legislation. This includes toys, aviation, cars, medical devices and lifts.

- AI systems falling into eight specific areas that will have to be registered in an EU database:

  - Biometric identification and categorisation of natural persons

  - Management and operation of critical infrastructure

  - Education and vocational training

  - Employment, worker management and access to self-employment

  - Access to and enjoyment of essential private services and public services and benefits

  - Law enforcement

  - Migration, asylum and border control management

  - Assistance in legal interpretation and application of the law
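To make the two criteria above concrete, here is a minimal sketch of a first-pass triage in Python. It assumes a system can be described by the products it is embedded in and the areas it is used for; the names `AISystem`, `high_risk_reasons`, `PRODUCT_SAFETY_AREAS` and `REGISTERED_AREAS` are hypothetical illustrations, not part of any official tool such as the EU AI Act Compliance Checker. The product list only holds the examples named above, so it is deliberately incomplete.

```python
from dataclasses import dataclass, field

# Hypothetical encoding of the two high-risk criteria summarized above.
# Only the example products named by the Parliament; the real scope is wider.
PRODUCT_SAFETY_AREAS = {"toys", "aviation", "cars", "medical devices", "lifts"}

# The eight areas whose systems will have to be registered in an EU database.
REGISTERED_AREAS = {
    "biometric identification and categorisation of natural persons",
    "management and operation of critical infrastructure",
    "education and vocational training",
    "employment, worker management and access to self-employment",
    "access to and enjoyment of essential private services and public services and benefits",
    "law enforcement",
    "migration, asylum and border control management",
    "assistance in legal interpretation and application of the law",
}


@dataclass
class AISystem:
    """Hypothetical description of an AI system for a first-pass triage."""
    name: str
    product_areas: set[str] = field(default_factory=set)  # products it is used in
    use_areas: set[str] = field(default_factory=set)      # areas it is used for


def high_risk_reasons(system: AISystem) -> list[str]:
    """Return the criteria (if any) under which the system looks high-risk."""
    reasons = []
    # Criterion 1: embedded in a product covered by EU product safety legislation.
    for area in sorted(system.product_areas & PRODUCT_SAFETY_AREAS):
        reasons.append(f"used in a product under EU product safety legislation: {area}")
    # Criterion 2: used in one of the eight registered areas.
    for area in sorted(system.use_areas & REGISTERED_AREAS):
        reasons.append(f"falls into a registered area: {area}")
    return reasons


if __name__ == "__main__":
    screening = AISystem(
        name="CV screening model",
        use_areas={"employment, worker management and access to self-employment"},
    )
    reasons = high_risk_reasons(screening)
    print(screening.name, "->", "high-risk" if reasons else "not flagged")
    for reason in reasons:
        print("  -", reason)
```

A recruitment model like the one in the usage example lands in the employment area, which matches the people-related processes called out in Annex III. Returning the matching reasons rather than a bare yes/no keeps the output useful as a starting point for the documentation the Act requires; anything flagged still needs a proper assessment against the text of the Act.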

Hopsworks is a platform for building and operating machine learning systems that provides tailored support for high-risk ML systems, with the most flexible and strongest enterprise-grade security model (dynamic role-based access control) of any AI platform available on-premises today.

Figure 2: EU AI Act Risk-based Classification of AI Systems

The Importance of the AI Act for Hopsworks and Its Customers

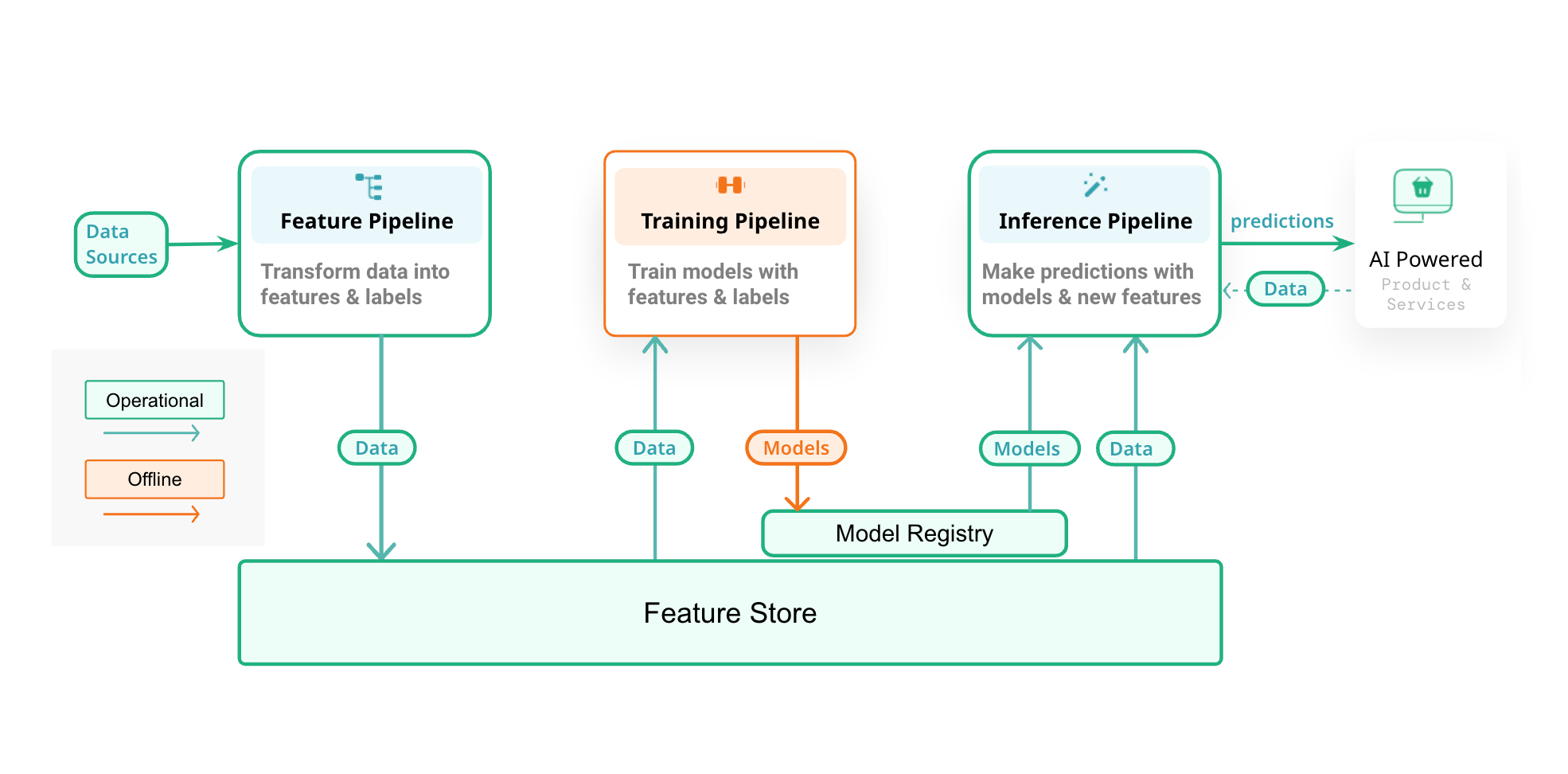

At Hopsworks, we have been building Machine Learning Systems for a long time. We like to emphasize that they are not just experiments or coding playgrounds: they are actual production systems that transform data sources into AI/ML-powered products and services. We have written about this extensively in our blog post ‘MLOps to ML Systems’, and we summarize it in a comprehensive diagram:

Figure 3: Mental Map for Machine Learning (ML) Systems

Once you understand and buy into the Mental Map for building these types of systems, you quickly see that it largely coincides with your map to complying with the EU AI Act, even for some of the higher-risk applications. Deploying your machine learning and artificial intelligence applications on a platform like Hopsworks immediately helps you meet most, if not all, of the requirements listed earlier in this article, and removes many of the concerns. Simply running your AI as a Machine Learning System will help you better manage the risks and govern your data processes. It will also allow you to better document how the AI-based business process works, and to report on it based on Hopsworks logs and records. All of this is available through an understandable user interface that can be shared with all stakeholders, and the platform can run either in the cloud or on your own infrastructure, following industry best practices for accuracy, robustness and security.

We at Hopsworks therefore welcome the EU AI Act, and greatly look forward to helping many clients seize the opportunity that the technology offers, in a way that meets the EU’s requirements both in spirit and in practice. The opportunity is real and immediate!

Learn more about the EU AI Act

Read more about the EU AI Act through these sources:

- The announcement: “Artificial Intelligence Act: deal on comprehensive rules for trustworthy AI” (European Parliament News)

- The website: “The Act” (EU Artificial Intelligence Act)

- The documents: main bill and annexes

- The AI Act presentation

- The current state of the compliance checker: EU AI Act Compliance Checker