AI Software Architecture for Copernicus Data with Hopsworks

Hopsworks brings scale-out AI to the ExtremeEarth project, which focuses on two pressing concerns: food security and sea mapping.

This article is based on the paper “The ExtremeEarth software Architecture for Copernicus Earth Observation Data” included in the Proceedings of the 2021 conference on Big Data from Space (BiDS 2021) [1].

Introduction

In recent years, unprecedented volumes of data have been generated in various domains. Copernicus, a European Union flagship programme, produces more than three petabytes (PB) of Earth Observation (EO) data annually from Sentinel satellites [2]. However, current AI architectures that use deep learning in remote sensing struggle to scale well enough to fully utilize this abundance of data.

ExtremeEarth is an EU-funded project that aims to develop use cases demonstrating how researchers can apply deep learning to Copernicus data in the various European Space Agency (ESA) Thematic Exploitation Platforms (TEPs). A main differentiator of ExtremeEarth from other such projects is the use of Hopsworks, a data-intensive AI software platform with a Feature Store and tooling for horizontally scalable learning. Hopsworks has been extended as part of the ExtremeEarth project to bring specialized AI tools to the EO data community, with two use cases already developed on the platform and more to come.

Bringing together a number of cutting-edge technologies, ranging from the storage of extremely large volumes of data to scalable, distributed machine learning and deep learning, and having them operate over the same infrastructure poses unprecedented challenges. These include, in particular, the integration of ESA TEPs and Data and Information Access Service (DIAS) platforms with a data platform (Hopsworks) that enables scalable data processing, machine learning, and deep learning on Copernicus data, and the development of very large training datasets for deep learning architectures targeting the classification of Sentinel images.

In this blog post, we describe both the software architecture of the ExtremeEarth project, with Hopsworks as its AI platform centerpiece, and the integration of Hopsworks with the other ExtremeEarth services and platforms that together make up a complete experience for AI with EO data.

AI with Copernicus data - Software Architecture

Several components make up the overall architecture. The main ones are the following.

Hopsworks. An open-source data-intensive AI platform with a feature store. Hopsworks can scale to the petabytes of data required by the ExtremeEarth project and provides tooling to build horizontally scalable end-to-end machine learning and deep learning pipelines. Data engineers and data scientists use the Hopsworks client SDKs, which facilitate AI data management, machine learning experiments, and serving machine learning models in production.

Thematic Exploitation Platforms (TEPs). These are collaborative, virtual work environments providing access to EO data and the tools, processors, and Information and Communication Technology (ICT) resources required to work with various themes, through one coherent interface. TEPs address coastal, forestry, hydrology, geohazards, polar, urban, and food security themes. ExtremeEarth is concerned in particular with the polar and food security TEPs, from which its use cases stem. These use cases include building machine learning models for sea ice classification to improve maritime traffic, as well as for food crop and irrigation classification.

Data and Information Access Service (DIAS). To facilitate and standardize access to data, the European Commission has funded the deployment of five cloud-based platforms. They provide centralized access to Copernicus data and information, as well as to processing tools. These platforms are known as the DIAS, or Data and Information Access Services [2]. The ExtremeEarth software architecture is built on CREODIAS, a cloud infrastructure platform adapted to processing large amounts of EO data. It includes an EO data storage cluster and a dedicated Infrastructure-as-a-Service (IaaS) cloud infrastructure for the platform’s users. The EO data repository contains data from Sentinel-1, 2, 3, and 5-P; Landsat-5, 7, and 8; Envisat; and many Copernicus Services.

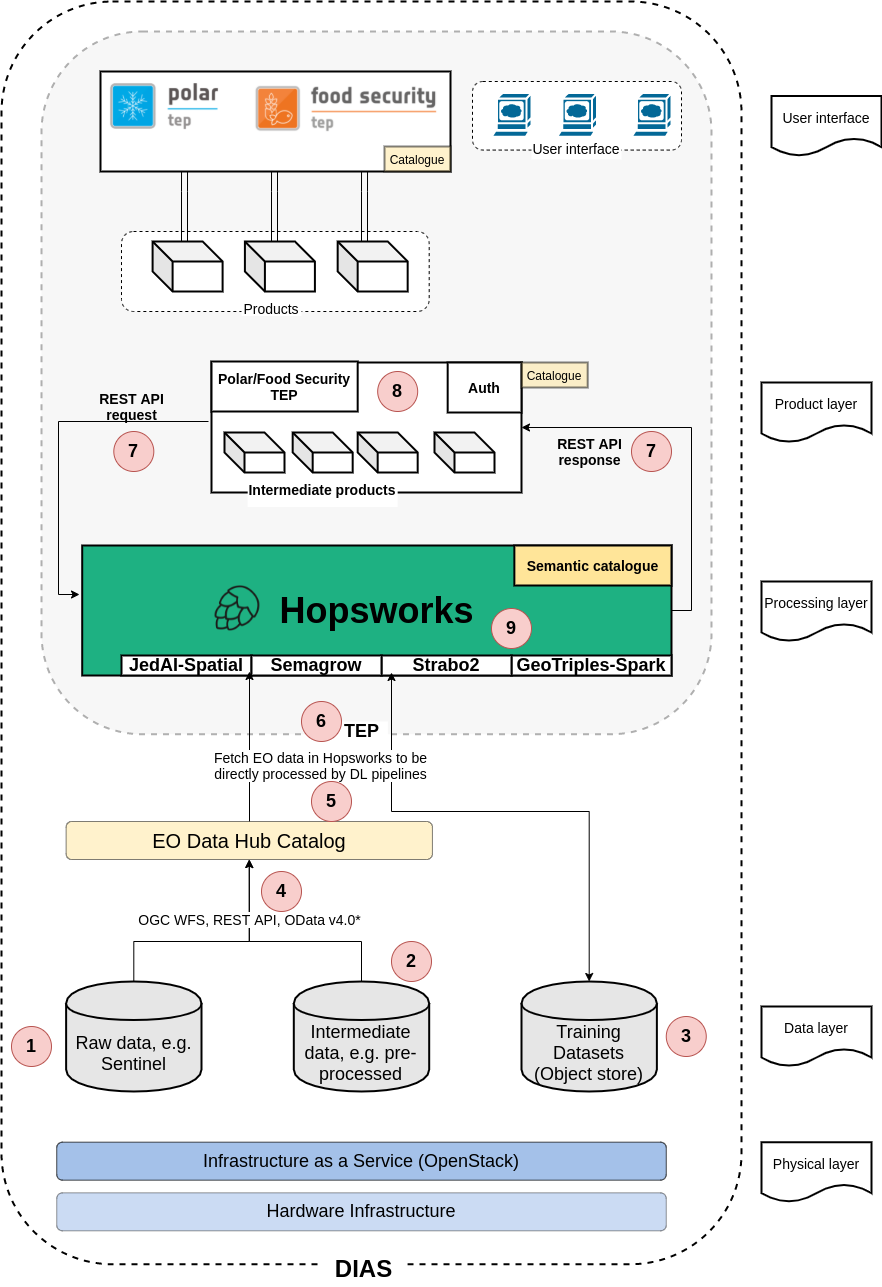

Figure 1 provides a high-level overview of how the different components are integrated with each other. The components can be classified into four main categories (layers):

- Product layer. The product layer provides a collaborative virtual work environment, through TEPs, that operates in the cloud and enables access to the products, tools, and relevant EO and non-EO data.

- Processing layer. This layer provides the Hopsworks data-intensive Artificial Intelligence (AI) platform. Hopsworks is installed within the CREODIAS OpenStack cloud infrastructure and operates alongside the TEPs. Also, Hopsworks has direct access to the data layer and the different data products provided by the TEPs.

- Data layer. The data layer offers a cloud-based platform that facilitates and standardizes access to EO data through a Data and Information Access Service (DIAS). It also provides centralized access to Copernicus data and information, as well as to processing tools. TEPs are installed and run on a DIAS infrastructure, which in the case of ExtremeEarth is CREODIAS.

- Physical layer. It contains the cloud environment’s compute, storage, networking resources, and hardware infrastructures.

To provide application users and data scientists with a coherent environment for AI with EO data, the architecture presented here aims to make most components transparent and to simplify developer access through well-defined APIs and commonly used interfaces such as RESTful APIs. A key part of the overall architecture is therefore how these different components are integrated into a coherent whole. The APIs used to integrate the ExtremeEarth components via the inter-layer interfaces of the software platform are described below and illustrated in Figure 1.

- Raw EO data. The DIAS platforms, and CREODIAS in particular, provide access to Copernicus data, including the downstream of Copernicus data as it is generated by the satellites. At the infrastructure level, this data is persisted in an object store with an S3 object interface, managed by CREODIAS.

- Pre-processed data. TEPs provide the developers of the deep learning pipelines with pre-processed EO data which forms the basis for creating training and testing datasets. Developers of the deep learning pipeline can also define and execute their own pre-processing, if the pre-processed data is not already available.

- Object storage. CREODIAS provides object storage for data produced and consumed by the TEP services and applications. In ExtremeEarth, this object store is used primarily for storing the training data required by the Polar and Food Security use cases. This training data is provided as input to the deep learning pipelines.

- EO Data Hub Catalog. This service is provided and managed by CREODIAS. It provides various protocols including OGC WFS and a REST API as interfaces to the EO data.

- TEP-Hopsworks EO data access. Users can directly access raw EO data from their applications running on Hopsworks. Multiple methods, such as an object data access API (SWIFT/S3) and a filesystem interface, are provided for accessing Copernicus and other EO data available on CREODIAS.

- TEP-Hopsworks infrastructure integration. Hopsworks and both the Polar and Food Security TEPs are installed and operated on CREODIAS. Its administrative tools enable TEPs to spawn and manage virtual machines and storage by using CloudFerro [3], which provides an OpenStack-based cloud platform to TEPs. Hopsworks is installed on this platform and can access the compute and storage resources provided by the TEPs.

- TEP-Hopsworks API integration. Hopsworks is provided within the TEP environment as a separate application and is mainly used as a development platform for the deep learning pipelines and applications of the Polar and Food Security use cases. These applications are exposed in the form of processors to the TEP users. Practically, a processor is a TEP abstraction that uses machine learning models that have previously been developed by the data scientists of the ExtremeEarth use cases in Hopsworks.
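As an illustration of the catalog interface listed above, the following is a minimal sketch of how a client might build an OGC WFS 2.0 `GetFeature` request for EO products inside a bounding box. The endpoint URL and the feature type name are hypothetical placeholders, not values published by CREODIAS, and the bounding box axis order depends on the coordinate reference system the service uses. Only the standard WFS key-value parameters (`service`, `version`, `request`, `typeNames`, `bbox`, `count`) are assumed.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

# Hypothetical endpoint and feature type: replace with the values documented
# by the catalog service you are actually using.
CATALOG_WFS_URL = "https://catalog.example.org/wfs"
FEATURE_TYPE = "eo:products"


def build_wfs_getfeature_url(base_url, type_name, bbox, max_features=10):
    """Return a WFS 2.0 GetFeature URL restricted to a bounding box.

    bbox is (lower_1, lower_2, upper_1, upper_2); the axis order follows
    the coordinate reference system used by the service.
    """
    lower_1, lower_2, upper_1, upper_2 = bbox
    params = {
        "service": "WFS",
        "version": "2.0.0",
        "request": "GetFeature",
        "typeNames": type_name,
        "bbox": f"{lower_1},{lower_2},{upper_1},{upper_2}",
        "count": max_features,
    }
    return f"{base_url}?{urlencode(params)}"


if __name__ == "__main__":
    url = build_wfs_getfeature_url(
        CATALOG_WFS_URL, FEATURE_TYPE, (10.0, 70.0, 20.0, 75.0), max_features=5
    )
    print(url)
    # Inspect the query string that would be sent to the service.
    print(parse_qs(urlsplit(url).query))
```

The sketch only constructs the URL, so it can be inspected or logged before any request is sent to the catalog.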

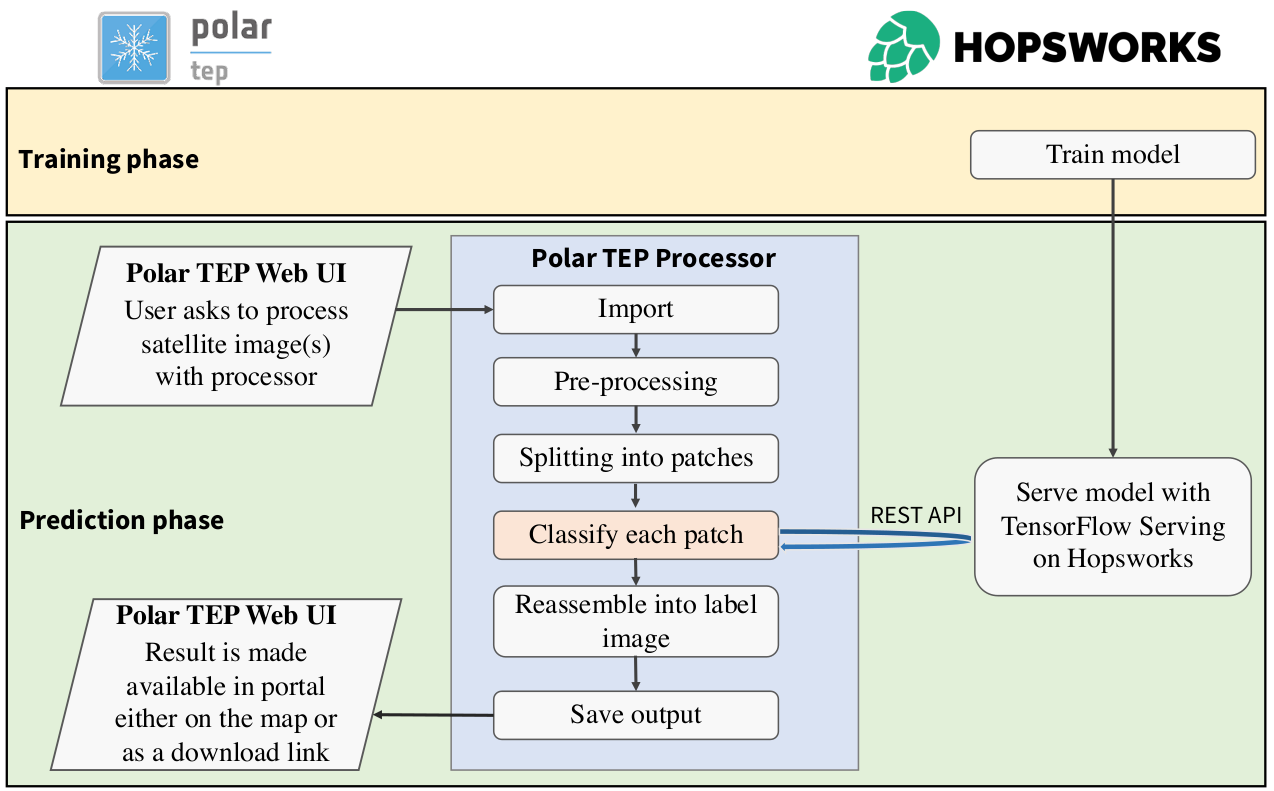

- Hopsworks-TEPs datasets. TEPs provide users with access to data from various sources to be served as input to processors. These sources include the data provided by CREODIAS and external services that the TEP can connect to. The pre-processed data is stored in object storage provided by CREODIAS and is made available to Hopsworks users by exchanging authentication information. Hopsworks users can also upload their own data to be used for training or serving.

  Hopsworks provides a REST API for clients to work with model serving. Authentication uses API keys, which Hopsworks manages on a per-user basis, so external clients can use these keys to authenticate against the Hopsworks REST API.

  There are two ways in which a trained model can be served via the TEP: (i) the model is exported from Hopsworks and embedded into the TEP processor, or (ii) the model is served online on Hopsworks, and a processor on the TEP submits inference requests to the model serving instance on Hopsworks and returns the results. In method (i), once the machine learning model is developed, it can be transferred from Hopsworks to the Polar TEP by using the Hopsworks REST API and Python SDK. TEP users can integrate the Hopsworks Python SDK into the processor workflow to further automate the machine learning pipeline lifecycle. In method (ii), the TEP submits inference requests to the model served by the online model serving infrastructure, which runs on Kubernetes and is hosted on Hopsworks. Figure 2 illustrates this approach for the Polar use case.

- Linked Data tools. Linked data applications are deployed as Hopsworks jobs using Apache Spark. A data scientist can work with big geospatial data using ontologies and submit GeoSPARQL queries using tools developed and extended within ExtremeEarth, namely GeoTriples [4], Strabo2, JedAI-Spatial, and Semagrow.
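To give a feel for the kind of GeoSPARQL query mentioned in the last item, here is a minimal sketch that builds a query selecting features whose geometry intersects a bounding box. It uses only vocabulary from the OGC GeoSPARQL standard (`geo:hasGeometry`, `geo:asWKT`, `geof:sfIntersects`). The helper names are hypothetical, the query is not taken from the ExtremeEarth project, and how a given tool expects queries to be submitted is left out on purpose.

```python
from string import Template

# Query template using standard GeoSPARQL prefixes and the simple-features
# "intersects" filter function. $wkt and $limit are filled in by the helper.
GEOSPARQL_QUERY = Template("""\
PREFIX geo: <http://www.opengis.net/ont/geosparql#>
PREFIX geof: <http://www.opengis.net/def/function/geosparql/>

SELECT ?feature ?wkt
WHERE {
  ?feature geo:hasGeometry ?geom .
  ?geom geo:asWKT ?wkt .
  FILTER(geof:sfIntersects(?wkt, "$wkt"^^geo:wktLiteral))
}
LIMIT $limit
""")


def bbox_to_wkt_polygon(min_lon, min_lat, max_lon, max_lat):
    """Return a closed WKT polygon (longitude latitude order) for a bounding box."""
    ring = [
        (min_lon, min_lat),
        (max_lon, min_lat),
        (max_lon, max_lat),
        (min_lon, max_lat),
        (min_lon, min_lat),  # repeat the first point to close the ring
    ]
    coords = ", ".join(f"{lon} {lat}" for lon, lat in ring)
    return f"POLYGON(({coords}))"


def build_intersects_query(bbox, limit=100):
    """Build a GeoSPARQL query for features intersecting the bounding box."""
    return GEOSPARQL_QUERY.substitute(
        wkt=bbox_to_wkt_polygon(*bbox), limit=int(limit)
    )


if __name__ == "__main__":
    print(build_intersects_query((10, 70, 20, 75), limit=5))
```

The resulting string could then be sent to whichever SPARQL endpoint the deployment exposes.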

Figure 1: ExtremeEarth software architecture

Figure 2: Online model serving on Hopsworks for the Polar use case

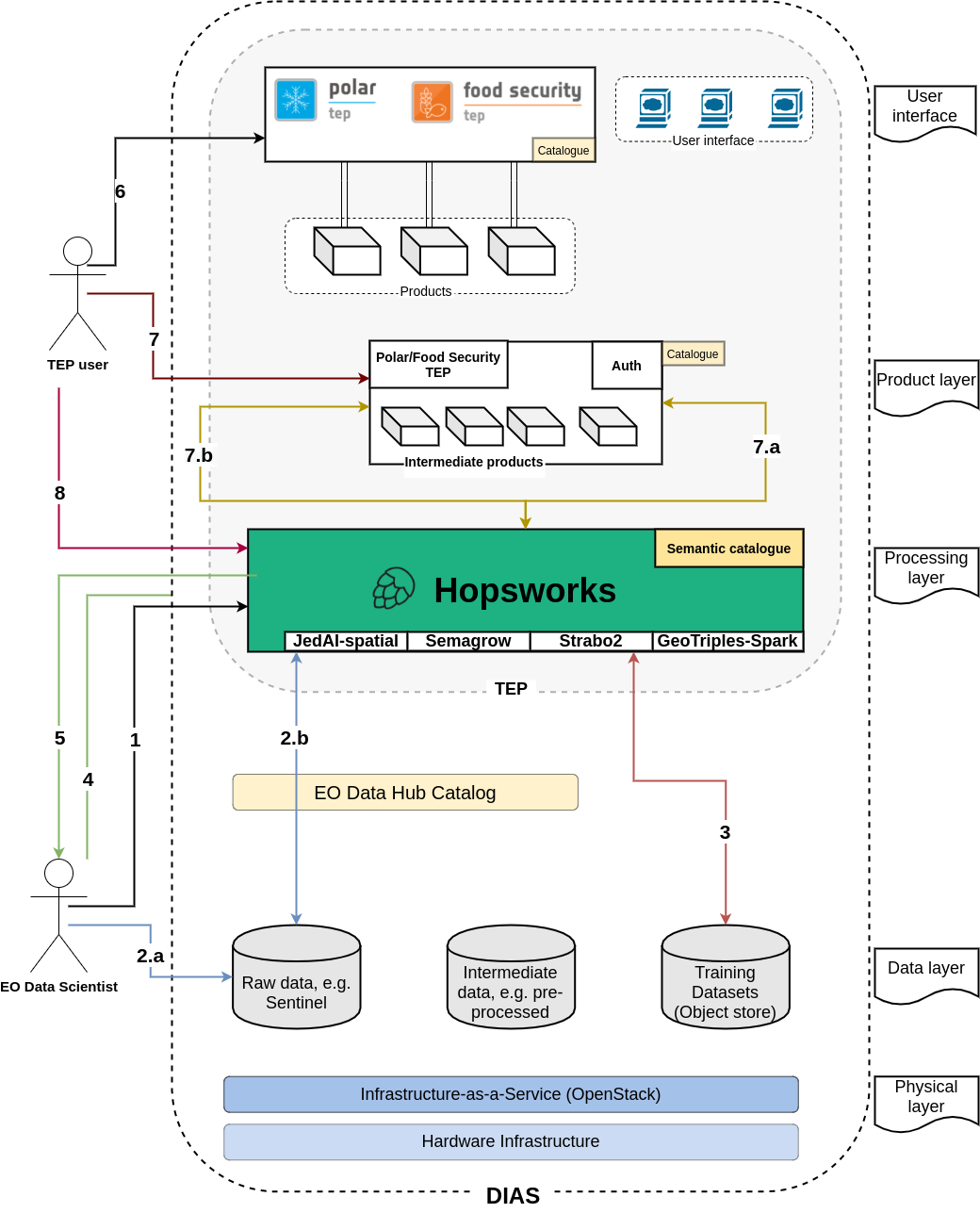

Application users who interact with the TEPs are, in effect, the users of the AI products generated by the machine learning and deep learning pipelines that data scientists develop in Hopsworks. The previous section described how the various components are integrated. Figure 3 depicts the flow of events within this architecture.

- EO data scientists log in to Hopsworks.

- Read and pre-process raw EO data in Hopsworks, in a TEP, or on a local machine.

- Create training datasets based on the intermediate pre-processed data.

- Develop deep learning pipelines.

- Perform linked data transformation, interlinking, and storage.

- Log in to the Polar or Food Security TEP applications.

- Select Hopsworks as the TEP processor. The processor either starts the model serving in Hopsworks via the REST API, or downloads the model from Hopsworks via the REST API so that serving is done within the TEP application.

- Submit federated queries with Semagrow and use the semantic catalogue built into Hopsworks.

Figure 3: ExtremeEarth software architecture flow of events
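The two serving options a TEP processor can choose between, embedding an exported model or calling a model served online, can be sketched as two interchangeable classes. This is an illustrative sketch, not the ExtremeEarth or Hopsworks implementation: the class names, the prediction URL, the `Authorization: ApiKey ...` header format, and the `instances`/`predictions` JSON layout are all assumptions made for the example. The HTTP transport is injected so the request can be inspected without contacting a server.

```python
import json
from dataclasses import dataclass
from typing import Callable
from urllib.request import Request, urlopen


@dataclass
class EmbeddedProcessor:
    """Option (i): the exported model runs inside the TEP processor."""

    model: Callable[[list], list]  # any callable mapping instances to predictions

    def predict(self, instances: list) -> list:
        return self.model(instances)


def send_with_urllib(request: Request) -> bytes:
    """Default transport: perform the HTTP call and return the raw response body."""
    with urlopen(request, timeout=30) as response:
        return response.read()


@dataclass
class OnlineProcessor:
    """Option (ii): the processor forwards inference requests to a remote model server."""

    predict_url: str  # hypothetical URL of the serving endpoint
    api_key: str      # per-user API key used for authentication
    send: Callable[[Request], bytes] = send_with_urllib

    def build_request(self, instances: list) -> Request:
        # Assumed payload layout: a JSON object with an "instances" list.
        body = json.dumps({"instances": instances}).encode("utf-8")
        return Request(
            self.predict_url,
            data=body,
            method="POST",
            headers={
                # Assumed header format for API key authentication.
                "Authorization": f"ApiKey {self.api_key}",
                "Content-Type": "application/json",
            },
        )

    def predict(self, instances: list) -> list:
        raw = self.send(self.build_request(instances))
        # Assumed response layout: a JSON object with a "predictions" list.
        return json.loads(raw)["predictions"]


if __name__ == "__main__":
    # A stand-in model: the "prediction" is just the sum of each instance.
    embedded = EmbeddedProcessor(model=lambda xs: [sum(x) for x in xs])
    print(embedded.predict([[1, 2], [3, 4]]))
```

Because both classes expose the same `predict` method, the rest of a processor workflow would not need to know which serving option is in use.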

Conclusion

In this blog post, we have shown how Hopsworks has been integrated with various services and platforms in order to extract knowledge and build AI applications using Copernicus and Earth Observation data. Two use cases, sea ice classification (Polar TEP) and crop type mapping and classification (Food Security TEP), have already been developed on this architecture using the petabytes of data made available by the Copernicus programme and infrastructure.

If you would like to try it out yourself, make sure to register an account. You can also follow Hopsworks on GitHub and Twitter.