Unlocking the Power of Function Calling with LLMs

This is a transcribed summary of our latest LLM Makerspace event, where we pulled back the curtain on an exciting paradigm in AI: function calling with large language models (LLMs). We dove into how this technique not only extends what LLMs can do but also unlocks the potential of structured enterprise data.

A Paradigm Shift with Function Calling

Function calling might ring a bell for some. OpenAI introduced the concept around ten months ago with GPT-4, and it has slowly been gathering steam. At its core, function calling lets an LLM request operations on other AI models and on structured data, much like invoking a function in programming. The result is something akin to AI calling upon AI, with structured historical data from tables brought into the mix. In effect, it is a sophisticated form of RAG (retrieval-augmented generation) that doesn't lean on a vector database.

So, why all the buzz around function calling? Well, it welcomes structured enterprise data into the realm of LLMs. There's been plenty of enthusiasm about employing unstructured data in vector databases to search for text relevant to a user's prompt, using similarity measures. However, that excitement hasn't quite extended to leveraging the wealth of data in tables, often found in databases or data lakes.

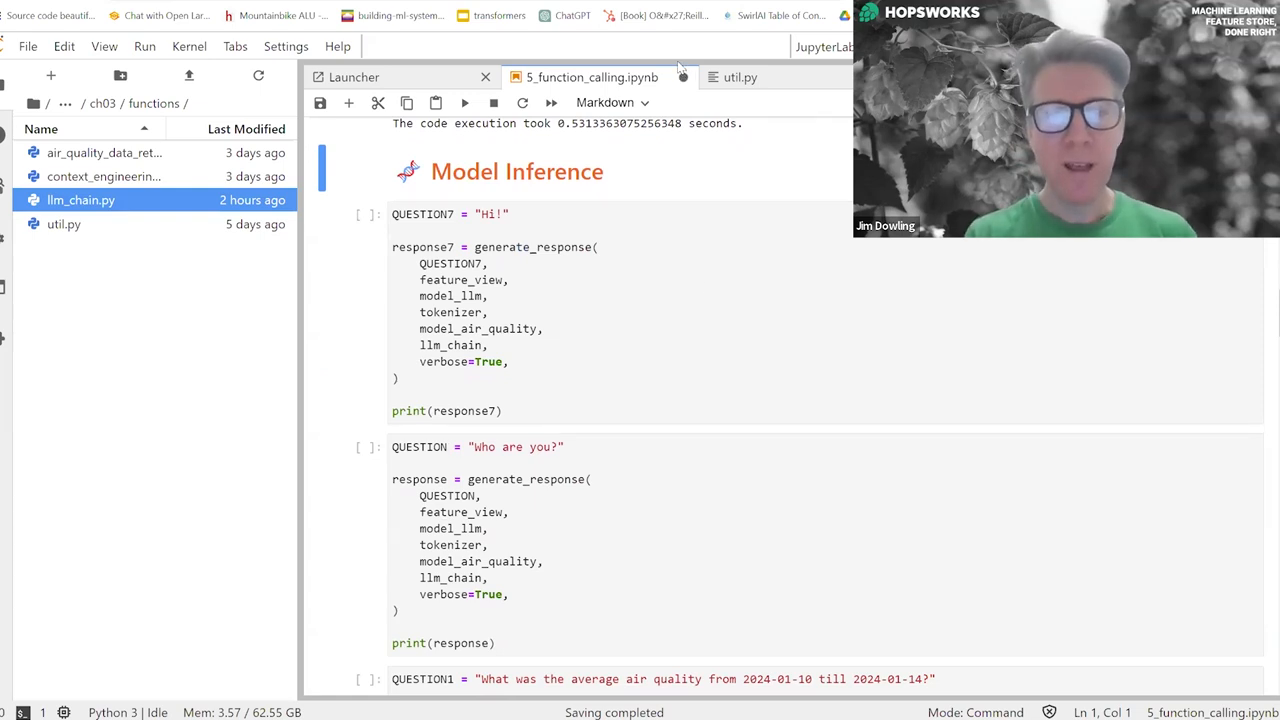

Here's how we might use Python to illustrate function calling, where ai_model.predict is a machine learning function that we call using historical data from a feature_store.
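
A minimal sketch of that idea, under stated assumptions: `FeatureStore` and `AirQualityModel` are hypothetical in-memory stand-ins (not the Hopsworks API), the readings are made-up values, and the "prediction" is simply the mean of recent readings so the example runs on its own.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class FeatureStore:
    """Hypothetical stand-in for a feature store holding historical rows."""
    rows: dict = field(default_factory=dict)  # street -> list of PM2.5 readings

    def get_history(self, street: str, days: int) -> list:
        # Return the most recent `days` readings for the street.
        return self.rows.get(street, [])[-days:]


class AirQualityModel:
    """Hypothetical stand-in for a trained model (ai_model in the text)."""

    def predict(self, history: list) -> float:
        # Placeholder logic: a real model would be trained on historical
        # air quality and weather features; here we average recent readings.
        if not history:
            raise ValueError("no historical data for this street")
        return round(mean(history), 1)


def predict_air_quality(street: str, feature_store: FeatureStore,
                        ai_model: AirQualityModel, days: int = 3) -> float:
    """A function an LLM could ask us to call: look up history, then predict."""
    history = feature_store.get_history(street, days)
    return ai_model.predict(history)


# Made-up readings, for illustration only.
feature_store = FeatureStore(rows={"Hornsgatan": [12.0, 15.0, 9.0, 11.0, 13.0]})
ai_model = AirQualityModel()
forecast = predict_air_quality("Hornsgatan", feature_store, ai_model)
print(forecast)  # 11.0 (mean of the last three readings)
```

The point of the shape is that the LLM never touches the data itself: it only names the function and its arguments, and the application does the lookup and the prediction.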

What Exactly is Function Calling, and Why Should You Care?

Function calling, in the context of LLMs, means the model uses your text prompt to infer which of the available functions to run, and outputs the parameters for that function as JSON. It won't actually run the function for you; you'll need to take that JSON and trigger the function in your own code. In other words, you type a question, the LLM responds with JSON, and your application puts that JSON to work. Python enthusiasts will particularly appreciate how straightforward this is to execute.
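
The "take that JSON and trigger the function" step can be sketched as follows. This is an illustration, not the event's actual code: the function names, their arguments, and the exact shape of the JSON are assumptions, and the model's reply is hard-coded rather than fetched from an LLM.

```python
import json


def get_future_air_quality(city: str, days_ahead: int) -> str:
    # Hypothetical function; a real one would call a trained model.
    return f"forecast for {city}, {days_ahead} day(s) ahead"


def get_historical_air_quality(city: str, date: str) -> str:
    # Hypothetical function; a real one would query a feature store.
    return f"historical reading for {city} on {date}"


# Only functions registered here can ever be triggered by model output.
AVAILABLE_FUNCTIONS = {
    "get_future_air_quality": get_future_air_quality,
    "get_historical_air_quality": get_historical_air_quality,
}


def dispatch(llm_output: str) -> str:
    """Parse the model's JSON and run the function it names."""
    call = json.loads(llm_output)
    name = call["name"]
    if name not in AVAILABLE_FUNCTIONS:
        raise ValueError(f"unknown function: {name}")
    return AVAILABLE_FUNCTIONS[name](**call["arguments"])


# Example of the kind of JSON a model might return (assumed shape).
llm_output = (
    '{"name": "get_future_air_quality", '
    '"arguments": {"city": "Stockholm", "days_ahead": 7}}'
)
result = dispatch(llm_output)
print(result)  # forecast for Stockholm, 7 day(s) ahead
```

Looking the name up in an explicit registry, rather than evaluating whatever the model returns, keeps the set of callable functions under the application's control.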

"By facilitating the use of structured enterprise data with AI, function calling isn't just an innovation – it's a game-changer."

Exploring an Air Quality Prediction Application

To breathe life into this concept, let's examine an air quality prediction application where function calling takes center stage. In Stockholm (where I'm based) we care deeply about air quality, but forecasts at a street level are hard to come by. Cue our smart application – trained on historical air quality data and weather patterns – to give you a personalized forecast using LLMs and function calling.

In the application, a user query about air quality, such as "Will the air quality be okay for me next week?", kicks off a chain reaction: the LLM uses function calling to pick either a machine learning model that returns future air quality predictions or a feature store lookup that returns historical data.
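
For the LLM to choose between those two paths, it needs a description of each available function. A sketch of such descriptions is shown here; the layout follows the JSON Schema style used by OpenAI-compatible chat APIs (other providers differ), and the function names and parameters are hypothetical rather than taken from the application.

```python
import json

# Hypothetical tool descriptions handed to the LLM alongside the user's prompt.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_future_air_quality",
            "description": "Predict air quality for a city some days ahead.",
            "parameters": {
                "type": "object",
                "properties": {
                    "city": {"type": "string"},
                    "days_ahead": {"type": "integer", "minimum": 1},
                },
                "required": ["city", "days_ahead"],
            },
        },
    },
    {
        "type": "function",
        "function": {
            "name": "get_historical_air_quality",
            "description": "Look up a past air quality reading for a city.",
            "parameters": {
                "type": "object",
                "properties": {
                    "city": {"type": "string"},
                    "date": {"type": "string", "description": "ISO date"},
                },
                "required": ["city", "date"],
            },
        },
    },
]

tool_names = [tool["function"]["name"] for tool in TOOLS]
print(tool_names)
print(json.dumps(TOOLS[0], indent=2))  # what the model would see for one tool
```

The `description` fields do most of the work: the model relies on them to decide that a question about "next week" maps to the forecasting function rather than the historical lookup.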

Secure Function Calling in LLMs

With great power comes great responsibility. That old adage couldn’t be truer when it comes to securely implementing function calling in LLM applications. It's essential to tread carefully, especially if you enable users to call functions that dig into sensitive internal data. Data leakage is a real threat. So, vetting the functions built into your LLM applications isn't just good practice – it's crucial.

Take this snippet, for instance. It illustrates an approach to ensuring only authorized users can call functions accessing sensitive data, which is a cornerstone of secure function calling with LLMs.
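
One way such a check might look is sketched here. The roles, function names, and permission table are all hypothetical; a real application would tie this to its own authentication and access-control system.

```python
class PermissionDenied(Exception):
    """Raised when a user may not call the requested function."""


def get_public_forecast(city: str) -> str:
    # Hypothetical function exposing non-sensitive data.
    return f"public forecast for {city}"


def get_internal_sensor_data(sensor_id: str) -> str:
    # Hypothetical function exposing sensitive internal data.
    return f"raw readings for sensor {sensor_id}"


FUNCTIONS = {
    "get_public_forecast": get_public_forecast,
    "get_internal_sensor_data": get_internal_sensor_data,
}

# Hypothetical policy: which roles may call which functions.
FUNCTION_PERMISSIONS = {
    "get_public_forecast": {"guest", "employee", "admin"},
    "get_internal_sensor_data": {"employee", "admin"},
}


def call_function_securely(user_role: str, name: str, arguments: dict) -> str:
    """Run a function requested by the LLM only if the user's role allows it."""
    allowed_roles = FUNCTION_PERMISSIONS.get(name)
    # Deny by default: unknown or unlisted functions are never executed.
    if allowed_roles is None or name not in FUNCTIONS:
        raise PermissionDenied(f"function not allowed: {name}")
    if user_role not in allowed_roles:
        raise PermissionDenied(f"role '{user_role}' may not call {name}")
    return FUNCTIONS[name](**arguments)


print(call_function_securely("guest", "get_public_forecast", {"city": "Stockholm"}))
try:
    call_function_securely("guest", "get_internal_sensor_data", {"sensor_id": "s1"})
except PermissionDenied as err:
    print("blocked:", err)
```

The check runs in application code, after the LLM has produced its JSON and before anything executes, so it holds regardless of how the prompt was phrased.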

Executing Function Calls: From External APIs to Personalized Data

A comprehensive LLM application built on function calling does much more than parse and execute hand-written functions. It opens the door to external API calls, conversion of natural language into API requests, data retrieval from databases, and even extraction of structured data from unstructured text.

Personalization adds yet another layer of sophistication. By incorporating user-specific data – say, information about someone's sensitivity to air pollution – we can tailor responses to queries such as "Will the air quality be okay for me this week?" with remarkable precision. Diving into the nuts and bolts of the application, you’ll find a sweet blend of Python code, Gradio UIs, and a touch of machine learning – a shining example of practical function calling using LLMs.
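
The personalization step can be sketched roughly like this. The sensitivity levels, the PM2.5 thresholds, and the forecast values are illustrative assumptions, not figures from the application or from any health guideline.

```python
# Hypothetical PM2.5 limits (µg/m³) per sensitivity level; illustrative values only.
THRESHOLDS = {"low": 35.0, "medium": 25.0, "high": 12.0}


def personalized_advice(sensitivity: str, forecast: dict) -> str:
    """Compare a forecast against the user's own limit and phrase the answer."""
    limit = THRESHOLDS[sensitivity]
    bad_days = [day for day, pm25 in forecast.items() if pm25 > limit]
    if not bad_days:
        return "Air quality looks okay for you all week."
    return "Consider limiting time outdoors on: " + ", ".join(bad_days)


# Made-up forecast, as might be returned by a prediction function.
forecast = {"Mon": 8.0, "Tue": 14.0, "Wed": 27.0}

print(personalized_advice("low", forecast))   # Air quality looks okay for you all week.
print(personalized_advice("high", forecast))  # Consider limiting time outdoors on: Tue, Wed
```

The same forecast yields different answers for different users, which is what lets the application answer "okay for me" rather than just "okay".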

Closing Thoughts

If you're itching to dissect the code and craft your own function calling-powered applications, head over to the LLM Makerspace GitHub repository, where you can explore and fork the project. Keep in mind, you'll need a Hopsworks account, which is free and comes with a generous allowance of capabilities.

As we wrap up this installment of LLM Makerspace, remember that function calling with LLMs isn't just another tech fad. It's a visionary approach that paves the way for more connected, intelligent, and responsive applications. Whether you're a data scientist, a machine learning enthusiast, or an enterprise innovator, the wonders of function calling await.

And for those on the journey of building machine learning systems, consider diving into the Building Machine Learning Systems with a Feature Store book by O'Reilly. The first chapter's on us – consider it a taste of the knowledge feast to come. Happy coding!

Watch the full episode of LLM Makerspace: