AI Pipeline

What is an AI Pipeline?

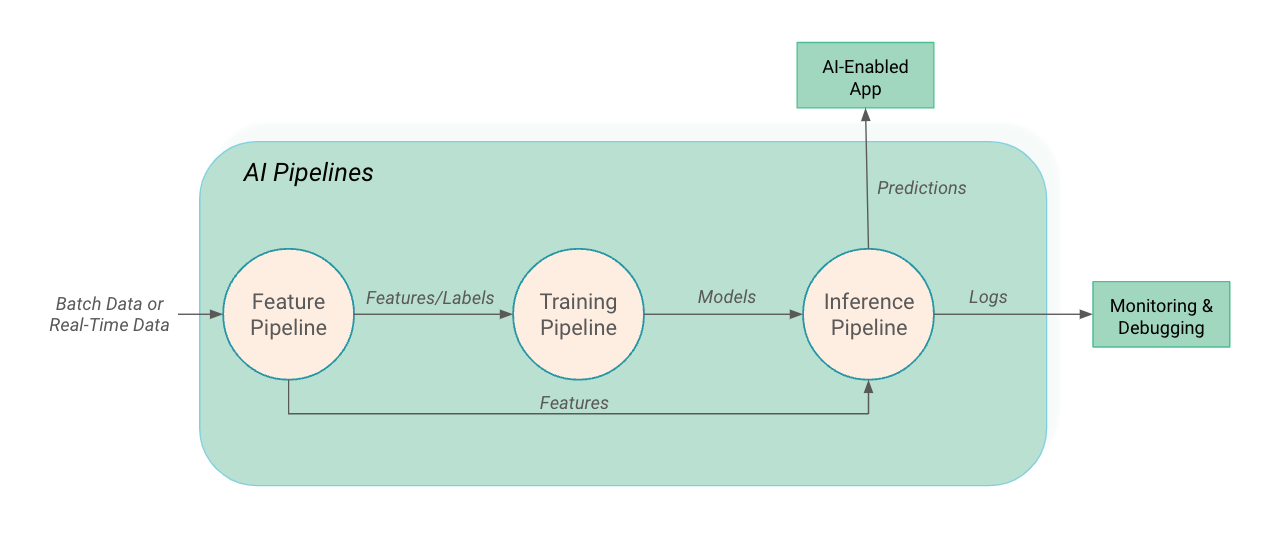

An AI Pipeline is a program that takes input and produces one or more ML artifacts as output. Typically, an AI pipeline is one of the following: a feature pipeline, a training pipeline, or an inference pipeline.

In the above figure, we can see three different examples of AI pipelines:

- a feature pipeline that takes as input raw data that it transforms into features (and labels),

- a training pipeline that takes as input features and labels, trains a model, and outputs the trained model,

- an inference pipeline that takes as input new feature data and a trained ML model, and produces as output predictions and prediction logs.
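Here is a minimal Python sketch that makes the inputs and outputs of the three pipelines concrete, assuming a toy energy-forecasting problem. The data, the function names (`feature_pipeline`, `training_pipeline`, `inference_pipeline`), and the one-feature least-squares model are illustrative assumptions, not part of any particular framework. Each function consumes only the previous stage's output, and that is what lets the stages run as separate programs.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional

# Hypothetical raw records: (timestamp, temperature_celsius, energy_kwh).
# A temperature of None marks a missing sensor reading.
RawRecord = tuple[str, Optional[float], float]


@dataclass
class Model:
    """A trained 1-D linear model: energy = slope * temperature + intercept."""
    slope: float
    intercept: float


def feature_pipeline(raw: list[RawRecord]) -> tuple[list[float], list[float]]:
    """Raw data in, features and labels out (drops rows with missing values)."""
    clean = [(temp, energy) for _, temp, energy in raw if temp is not None]
    features = [temp for temp, _ in clean]
    labels = [energy for _, energy in clean]
    return features, labels


def training_pipeline(features: list[float], labels: list[float]) -> Model:
    """Features and labels in, trained model out (ordinary least squares)."""
    mx, my = mean(features), mean(labels)
    # Sum of cross-deviations and sum of squared deviations of x
    sxy = sum((x - mx) * (y - my) for x, y in zip(features, labels))
    sxx = sum((x - mx) ** 2 for x in features)
    slope = sxy / sxx
    return Model(slope=slope, intercept=my - slope * mx)


def inference_pipeline(new_features: list[float], model: Model) -> tuple[list[float], list[dict]]:
    """New feature data and a trained model in, predictions and prediction logs out."""
    predictions = [model.slope * x + model.intercept for x in new_features]
    logs = [{"feature": x, "prediction": p} for x, p in zip(new_features, predictions)]
    return predictions, logs


# Usage: each stage's output is the next stage's input.
raw = [("t1", 10.0, 25.0), ("t2", 20.0, 45.0), ("t3", None, 30.0), ("t4", 30.0, 65.0)]
X, y = feature_pipeline(raw)               # X = [10.0, 20.0, 30.0]
model = training_pipeline(X, y)            # Model(slope=2.0, intercept=5.0)
preds, logs = inference_pipeline([15.0, 40.0], model)  # preds = [35.0, 85.0]
```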

Feature pipelines can be batch programs or streaming programs. Inference pipelines can be batch programs or online inference pipelines; the latter wrap models that model serving infrastructure makes accessible through a network endpoint.
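The batch/streaming and batch/online distinctions can be sketched in a few lines of Python. Everything here is hypothetical: the purchase events, the "total spend per user" feature, and the `predict` rule standing in for a trained model. The online handler is a plain function that maps a JSON request to a JSON response; in a real system, model serving infrastructure would expose something like it through a network endpoint.

```python
import json
from collections import defaultdict

# Hypothetical event data: (user_id, purchase_amount)
EVENTS = [("alice", 40.0), ("bob", 10.0), ("alice", 70.0)]


def batch_feature_pipeline(events):
    """Batch: recompute the feature (total spend per user) over the whole dataset in one run."""
    totals = defaultdict(float)
    for user, amount in events:
        totals[user] += amount
    return dict(totals)


class StreamingFeaturePipeline:
    """Streaming: keep state and update the feature incrementally as each event arrives."""

    def __init__(self):
        self.totals = defaultdict(float)

    def on_event(self, user, amount):
        self.totals[user] += amount
        return user, self.totals[user]  # the freshly updated feature value


def predict(total_spend):
    """Hypothetical stand-in for a trained model: flags users who spent more than 100."""
    return total_spend > 100.0


def batch_inference_pipeline(features):
    """Batch inference: score every user in one (e.g. scheduled) run."""
    return {user: predict(total) for user, total in features.items()}


def online_inference_handler(request_body):
    """Online inference: answer one JSON request at a time."""
    request = json.loads(request_body)
    return json.dumps({"user": request["user"], "flagged": predict(request["total_spend"])})


# Usage
batch_features = batch_feature_pipeline(EVENTS)  # {"alice": 110.0, "bob": 10.0}
stream = StreamingFeaturePipeline()
for user, amount in EVENTS:
    stream.on_event(user, amount)
streamed_features = dict(stream.totals)          # same values, built event by event
batch_predictions = batch_inference_pipeline(batch_features)  # {"alice": True, "bob": False}
response = online_inference_handler('{"user": "alice", "total_spend": 110.0}')
# response == '{"user": "alice", "flagged": true}'
```

The streaming pipeline ends with the same values as the batch one, but it updates them event by event, which keeps features fresh between batch runs.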

Why are AI Pipelines important?

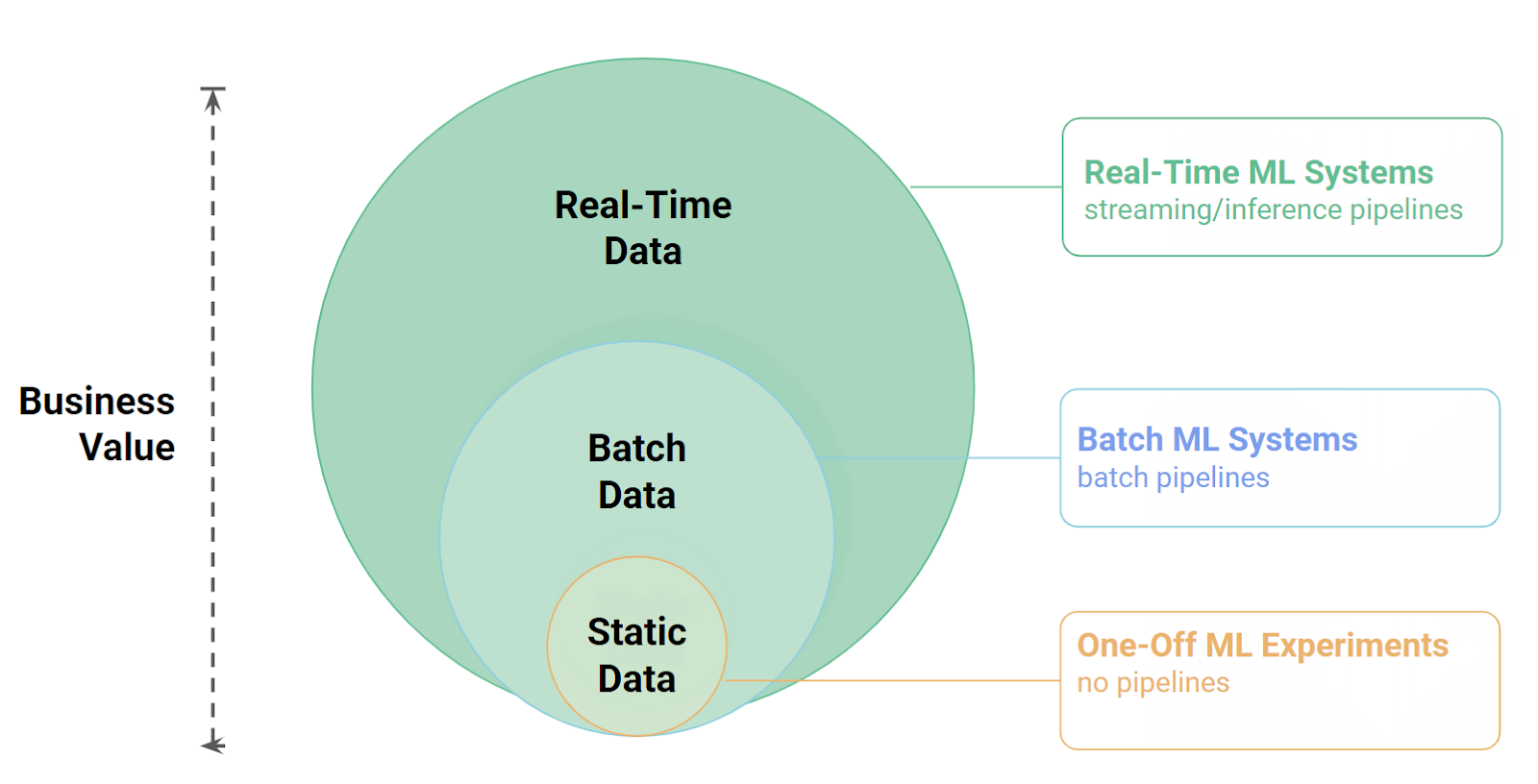

AI pipelines let you move beyond training a model on a static dataset and making one-off predictions on that same dataset. Instead, you work with dynamic data, so your model can keep generating value by making predictions on new data. AI pipelines also help make machine learning workflows reproducible and scalable. Decomposing the process into multiple pipelines makes each stage easier to manage, version control, and share.

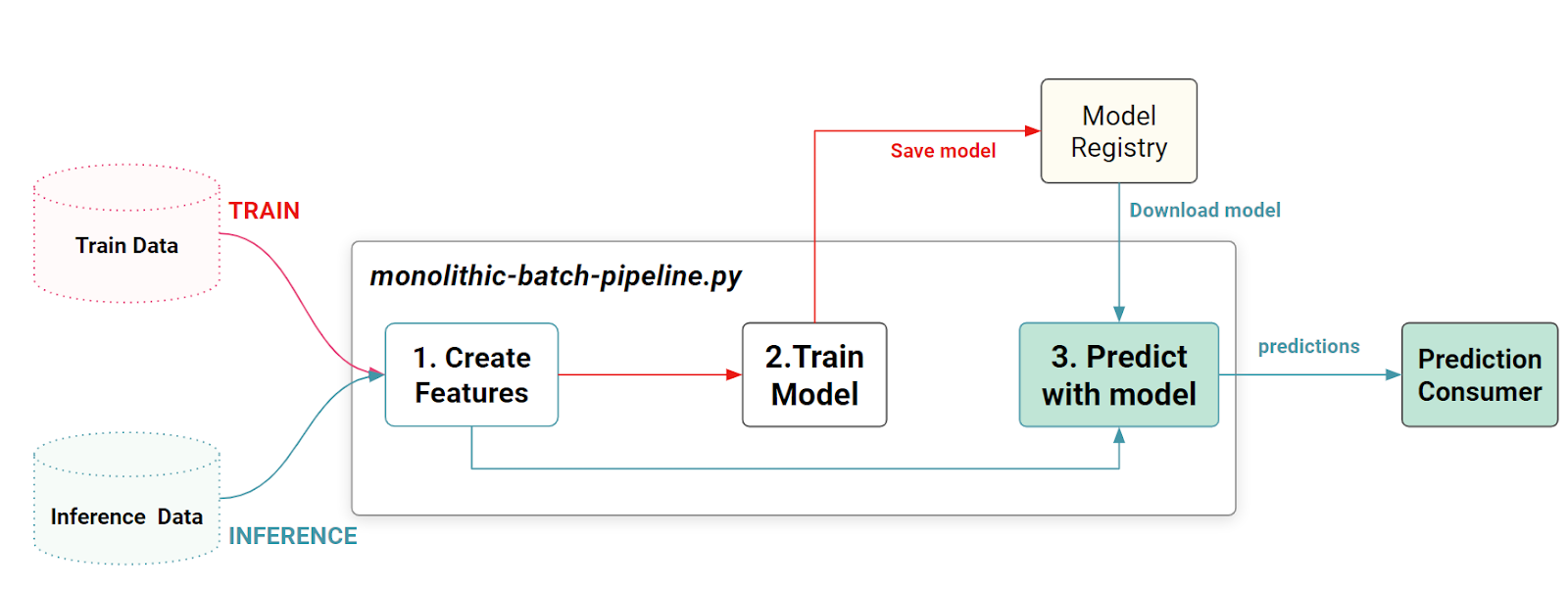

What is a monolithic ML pipeline?

A monolithic ML pipeline is a single program that can be run as either (1) a feature pipeline followed by a training pipeline or (2) a feature pipeline followed by a batch inference pipeline.
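Here is a minimal sketch of a monolithic pipeline, assuming a single Python script whose command-line `mode` argument selects one of the two runs. The data (`RAW_HISTORICAL`, `RAW_NEW`), the `--model-path` option, and the least-squares-through-the-origin model are hypothetical choices for illustration. The point is that the feature logic lives inside the same program as training and inference.

```python
import argparse
import json

# Hypothetical in-code data sources; a real pipeline would read from files or databases.
RAW_HISTORICAL = [{"x": 1.0, "y": 2.0}, {"x": 2.0, "y": 4.0}, {"x": None, "y": 9.0}]
RAW_NEW = [{"x": 4.0}, {"x": None}, {"x": 0.5}]


def feature_step(rows):
    """Feature logic shared by both modes: drop rows with a missing x."""
    return [row for row in rows if row["x"] is not None]


def training_step(rows, model_path):
    """Fit y = w * x by least squares through the origin (w = sum(xy) / sum(x^2))."""
    w = sum(r["x"] * r["y"] for r in rows) / sum(r["x"] ** 2 for r in rows)
    with open(model_path, "w") as f:
        json.dump({"w": w}, f)
    return w


def batch_inference_step(rows, model_path):
    """Load the previously trained model and predict for every row."""
    with open(model_path) as f:
        w = json.load(f)["w"]
    return [w * r["x"] for r in rows]


def main(argv=None):
    parser = argparse.ArgumentParser(description="Monolithic ML pipeline (sketch)")
    parser.add_argument("mode", choices=["train", "inference"])
    parser.add_argument("--model-path", default="model.json")
    args = parser.parse_args(argv)
    if args.mode == "train":
        # Mode (1): feature pipeline followed by a training pipeline
        return training_step(feature_step(RAW_HISTORICAL), args.model_path)
    # Mode (2): feature pipeline followed by a batch inference pipeline
    return batch_inference_step(feature_step(RAW_NEW), args.model_path)
```

Calling `main(["train"])` and later `main(["inference"])` corresponds to running the same program twice with different arguments. The first call returns `2.0`, the second `[8.0, 1.0]`. The model file is the only thing handed from one run to the next, and the feature logic is re-executed in every run.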

Is a Data Pipeline an AI Pipeline?

A data pipeline can be an AI pipeline, but the term "data pipeline" is too generic. It does not say what the pipeline's inputs and outputs are, which makes it an unclear term when communicating about AI pipelines and ML systems.

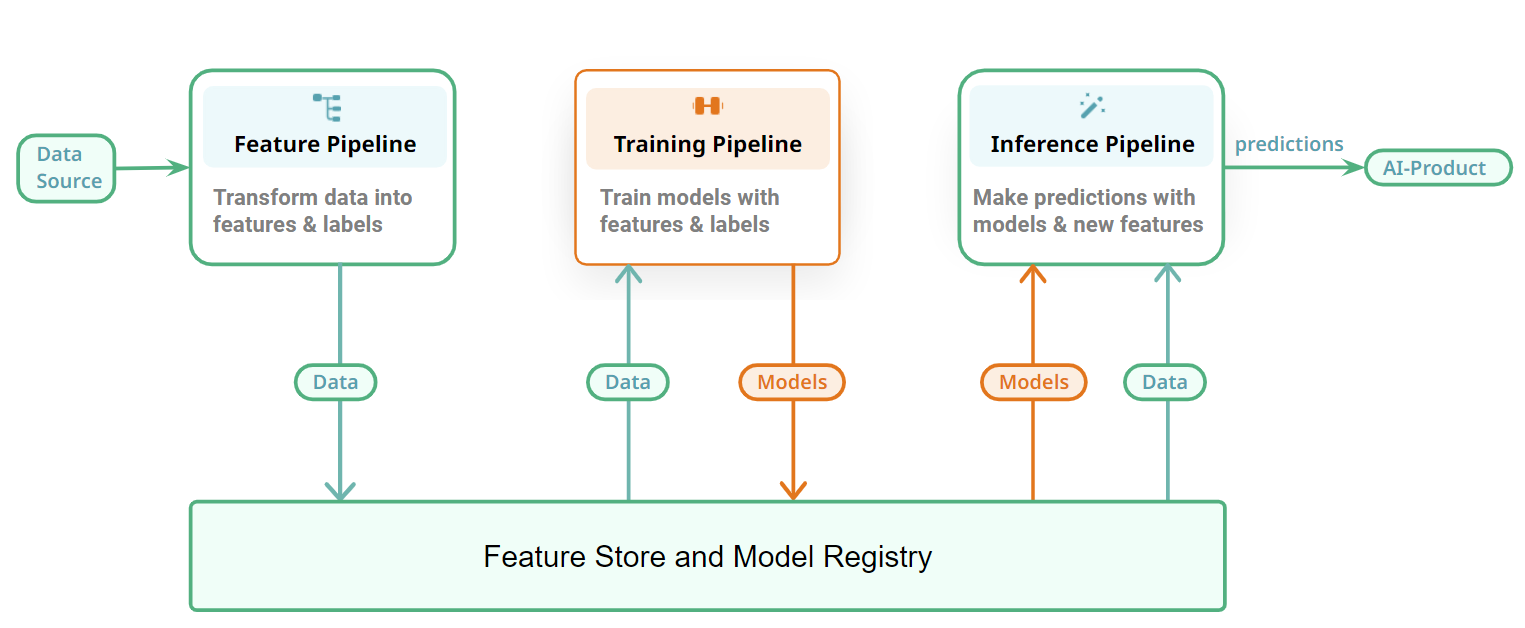

How are AI pipelines related to the feature store?

If you have a feature store, you can decompose a monolithic ML pipeline into feature, training, and inference pipelines. The feature store becomes the data layer for your AI pipelines: it stores the outputs of the feature pipeline and provides the inputs to the training and inference pipelines.
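This decomposition can be sketched with a hypothetical in-memory class, `InMemoryFeatureStore`, standing in for a real feature store. Its `insert`/`read` methods are assumptions for illustration and do not mirror any product's API. The fraud-detection data and the threshold "model" are also invented. The key property is that the three pipelines never call one another: the feature pipeline writes features and labels to the store, and the training and inference pipelines read from it.

```python
from collections import defaultdict
from statistics import mean


class InMemoryFeatureStore:
    """Hypothetical stand-in for a feature store (not any product's API):
    named feature groups, each holding rows keyed by their "id" column."""

    def __init__(self):
        self._groups = defaultdict(dict)

    def insert(self, group, rows):
        for row in rows:
            self._groups[group][row["id"]] = row  # upsert on the primary key

    def read(self, group, ids=None):
        table = self._groups[group]
        return [table[i] for i in (table.keys() if ids is None else ids)]


def feature_pipeline(store, raw_events):
    """Writes features, plus labels where they are known, to the store."""
    store.insert("transactions",
                 [{"id": e["id"], "amount_usd": e["amount_cents"] / 100} for e in raw_events])
    store.insert("transaction_labels",
                 [{"id": e["id"], "is_fraud": e["is_fraud"]} for e in raw_events if "is_fraud" in e])


def training_pipeline(store):
    """Reads features joined with labels; the "model" is a threshold halfway between class means."""
    labels = {r["id"]: r["is_fraud"] for r in store.read("transaction_labels")}
    features = store.read("transactions", list(labels))
    fraud = [f["amount_usd"] for f in features if labels[f["id"]]]
    legit = [f["amount_usd"] for f in features if not labels[f["id"]]]
    return (mean(fraud) + mean(legit)) / 2


def inference_pipeline(store, threshold, ids):
    """Reads features for the requested ids from the store and predicts."""
    return {f["id"]: f["amount_usd"] > threshold for f in store.read("transactions", ids)}


# Usage: the pipelines share only the store.
store = InMemoryFeatureStore()
feature_pipeline(store, [
    {"id": "t1", "amount_cents": 1000, "is_fraud": False},
    {"id": "t2", "amount_cents": 3000, "is_fraud": False},
    {"id": "t3", "amount_cents": 90000, "is_fraud": True},
    {"id": "t4", "amount_cents": 110000, "is_fraud": True},
])
threshold = training_pipeline(store)  # 510.0
feature_pipeline(store, [{"id": "t5", "amount_cents": 50000}, {"id": "t6", "amount_cents": 70000}])
predictions = inference_pipeline(store, threshold, ["t5", "t6"])  # {"t5": False, "t6": True}
```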