Feature Type

What are feature types in machine learning?

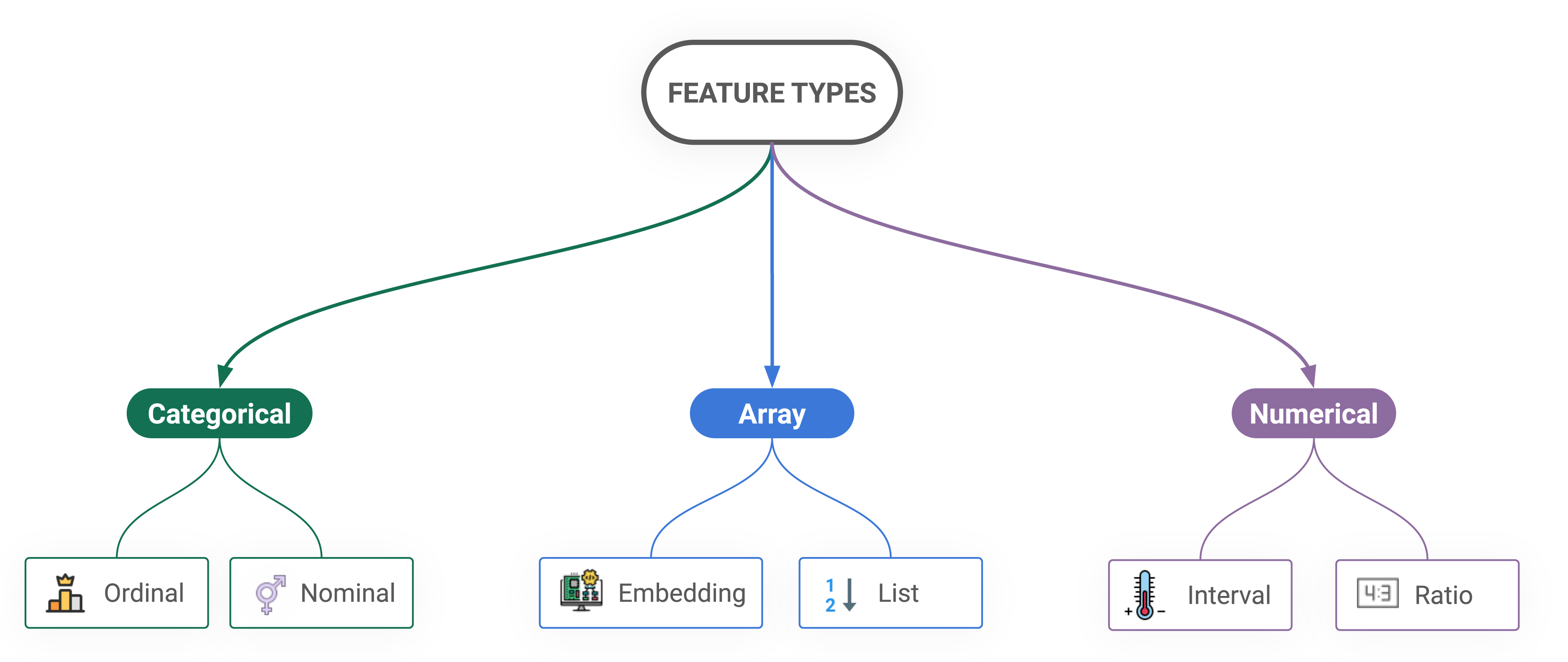

A feature type defines the set of valid encodings (model-dependent transformations) that can be performed on a feature value. The standard feature types are categorical (ordinal or nominal), numerical (interval or ratio), and array types (lists or embeddings).
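The idea that a feature type restricts which encodings are valid can be sketched in a few lines of Python. This is a minimal illustration that assumes feature columns are plain Python lists. The enum `FeatureType`, the `VALID_ENCODINGS` table, and the helpers `encode`, `one_hot`, `ordinal_encode`, `standardize`, and `min_max` are hypothetical names chosen for this example and do not come from any library. The allowed-encodings table is one reasonable choice, not a definitive rule set.

```python
from enum import Enum
from statistics import mean, pstdev


class FeatureType(Enum):
    # Hypothetical enum mirroring the taxonomy: categorical, numerical, array
    NOMINAL = "categorical/nominal"
    ORDINAL = "categorical/ordinal"
    INTERVAL = "numerical/interval"
    RATIO = "numerical/ratio"
    ARRAY = "array"


def one_hot(values):
    # One binary column per distinct category (categories sorted for determinism)
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values]


def ordinal_encode(values, order):
    # Map each category to its rank in a caller-supplied order
    rank = {c: i for i, c in enumerate(order)}
    return [rank[v] for v in values]


def standardize(values):
    # Zero mean, unit (population) standard deviation
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma if sigma else 0.0 for v in values]


def min_max(values):
    # Rescale to the range [0, 1]
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) if hi != lo else 0.0 for v in values]


# Hypothetical table of which encodings are considered valid per feature type
VALID_ENCODINGS = {
    FeatureType.NOMINAL: {"one_hot"},
    FeatureType.ORDINAL: {"ordinal", "one_hot"},
    FeatureType.INTERVAL: {"standardize", "min_max"},
    FeatureType.RATIO: {"standardize", "min_max"},
    FeatureType.ARRAY: {"identity"},  # e.g. embeddings passed through unchanged
}

ENCODERS = {
    "one_hot": one_hot,
    "ordinal": ordinal_encode,
    "standardize": standardize,
    "min_max": min_max,
    "identity": lambda values: list(values),
}


def encode(values, ftype, encoding, **kwargs):
    # Reject encodings that are not valid for the declared feature type
    allowed = VALID_ENCODINGS[ftype]
    if encoding not in allowed:
        raise ValueError(
            f"{encoding!r} is not a valid encoding for {ftype.value} features; "
            f"choose from {sorted(allowed)}"
        )
    return ENCODERS[encoding](values, **kwargs)
```

For example, `encode(["low", "high", "medium"], FeatureType.ORDINAL, "ordinal", order=["low", "medium", "high"])` returns `[0, 2, 1]`, while asking for `"ordinal"` on a `FeatureType.NOMINAL` column raises `ValueError`, because a nominal feature has no natural order to encode.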

The figure above shows a taxonomy of data types for features; you can read more about it in the article about feature types for machine learning.

Why is it important to understand feature types?

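Feature types determine how feature values can be encoded and represented in a machine learning model. Different feature types require different encodings and processing, and using an encoding that does not match the feature type can degrade model performance. For example, encoding a nominal feature such as country as the integers 0, 1, 2, … imposes an order and distances between categories that do not exist in the data, and the model may learn from that artificial structure.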
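To make the cost of a mismatched encoding concrete, the following minimal sketch compares two encodings of a hypothetical nominal feature `color`. It assumes Euclidean distance as the notion of similarity, as a distance-based model such as k-nearest neighbours would use; the feature values and variable names are illustrative only.

```python
import math
from itertools import combinations

# Hypothetical nominal feature: the categories have no inherent order
colors = ["red", "green", "blue"]

# Mismatched encoding: arbitrary integer codes (red=0, green=1, blue=2)
label_codes = {c: i for i, c in enumerate(colors)}

# Matched encoding: one-hot vectors, one dimension per category
one_hot_codes = {c: [1.0 if c == other else 0.0 for other in colors] for c in colors}

# Pairwise distances under each encoding
label_dists = {
    (a, b): abs(label_codes[a] - label_codes[b]) for a, b in combinations(colors, 2)
}
one_hot_dists = {
    (a, b): math.dist(one_hot_codes[a], one_hot_codes[b])
    for a, b in combinations(colors, 2)
}

for pair in label_dists:
    a, b = pair
    print(f"{a}-{b}: label={label_dists[pair]}, one_hot={one_hot_dists[pair]:.3f}")
```

With integer codes, red and blue end up twice as far apart as red and green, purely because of the arbitrary code assignment. With one-hot encoding, every pair of distinct categories is the same distance (√2) apart, which matches the absence of order in a nominal feature.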